Common problems taking ML from lab to production

A look into disappearing data and degraded performance preventing ML models from shipping

Written by: Charna Parkey, cross-posted from Kaskada in August 2020

Part 1: The continuous ML lifecycle

MLOps can empower us as data scientists to bring more of our models to production, faster. But what is MLOps? Demand for machine learning in every application keeps expanding, and the need for rapid, constantly improving performance has never been greater. To meet that demand, companies are adopting a machine learning operations (MLOps) culture to streamline the development, deployment, and management of production ML at scale.

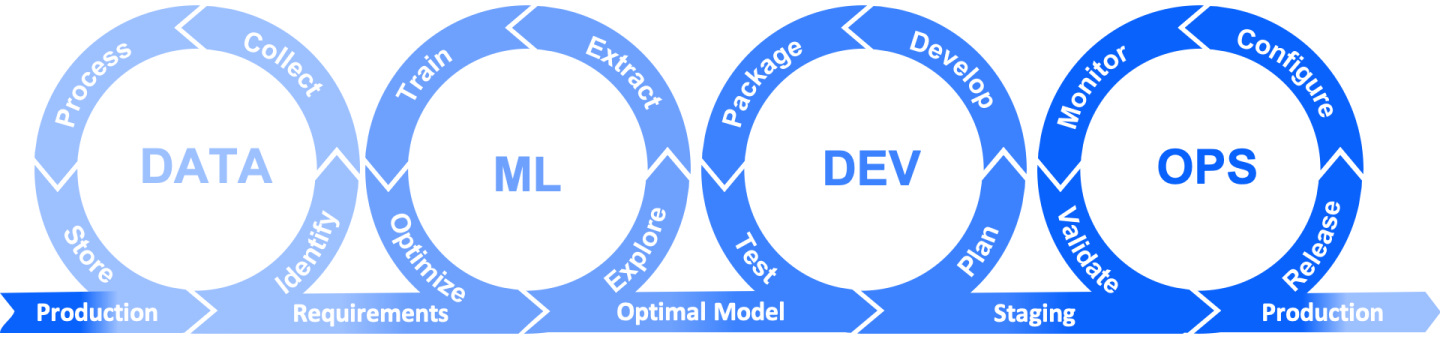

MLOps isn’t a single product or idea; it is a set of principles that make an MLOps culture practical. The goal is to shorten the time it takes to get your models to production, apply incremental improvements to models as the environment changes, and create streamlined development and deployment processes. This sounds a lot like DevOps, but MLOps is a little different because machine learning operations include the data and models in addition to the code and infrastructure.

Organizations adopting MLOps methodologies are changing the way that data scientists view and carry out the machine learning lifecycle. We are no longer only engineering features or training models on offline data; we now have partial ownership over deploying, monitoring, and updating models at scale. 🎉

Your company is moving in (or considering) this direction. Not because it’s the trendy thing to do, but because it has been shown to shorten the time it takes to get to production. It also empowers domain experts, like us, with continuous integration and deployment. But how will things change, is it worth it, and how do we become good citizens in this new world?

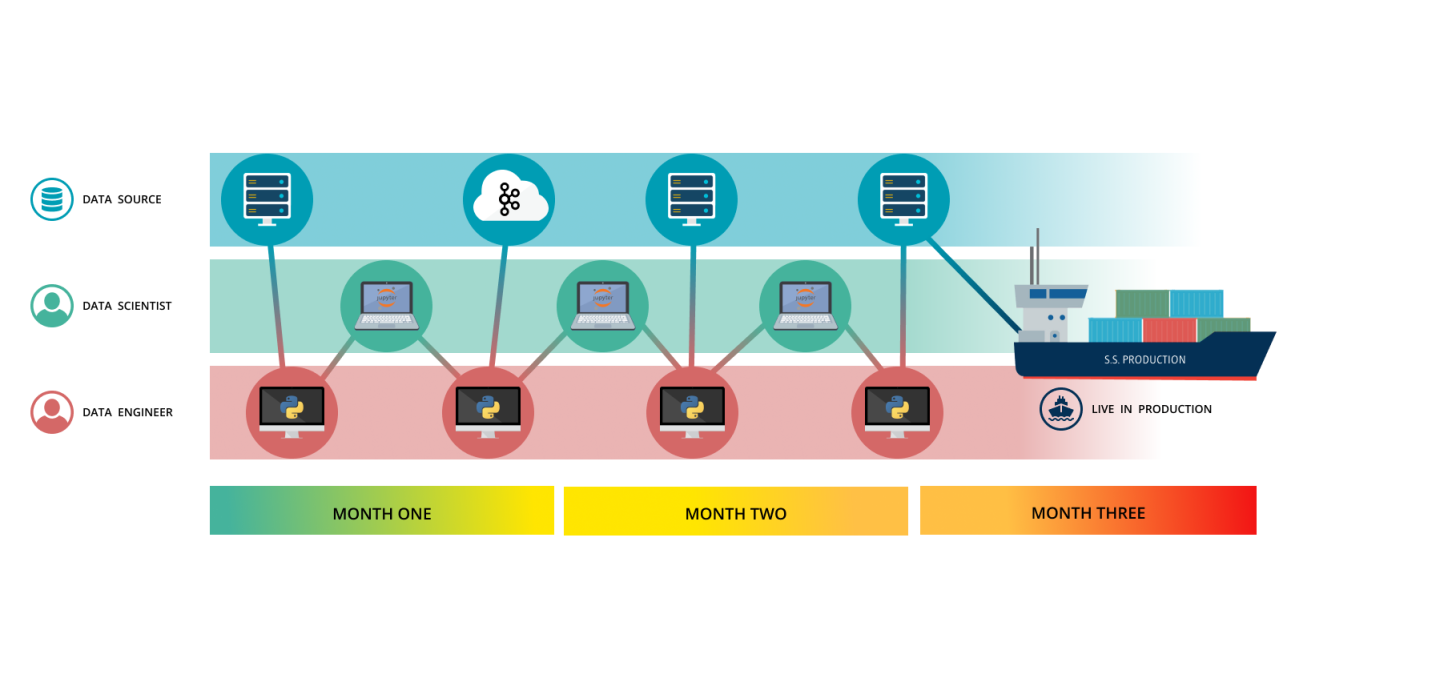

Today your team may be using a variety of experimental platforms, languages, and frameworks that rarely produce production-ready models, so each new model has to be translated for production by a different team. But it’s hard to get that work prioritized alongside everything your data, ML, and infrastructure teams already have on their plates. Plus, without an MLOps story, it is difficult to deploy and monitor your models.

Even once a new model is prioritized to go to production, performance often takes a hit in the process. Without visibility into the simplifying assumptions that were made to get to production, or into how models are performing over time, it’s difficult to iterate, and hard to proactively make a business case for updating models. Often you have to notice that the shape of your data doesn’t look quite right on an offline model and guess that performance has degraded. Well, unless the models fail spectacularly, and sometimes publicly! We’re all familiar with these pressures, and this is where MLOps comes in: it takes the pressure off any single iteration and makes continued experimentation possible.
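To make "noticing that the shape of your data doesn’t look quite right" a little less of a guessing game, here is a minimal sketch of an automated drift check. It uses the Population Stability Index (PSI), which is a technique I’m swapping in for illustration, not something prescribed above. The function name, the bin count, and the alert threshold are all assumptions; 0.25 is a commonly quoted rule of thumb, not a standard, and you would tune it for your own features.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,       # assumed bin count, tune per feature
    eps: float = 1e-6,    # floor so empty bins don't produce log(0)
) -> float:
    """Return the PSI between two samples of one numeric feature."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")

    # Bin edges come from the baseline (e.g. training) sample only.
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature

    def bucket_fractions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    expected = bucket_fractions(baseline)
    actual = bucket_fractions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


if __name__ == "__main__":
    training_sample = [i / 100 for i in range(1000)]
    production_sample = [x + 3 for x in training_sample]  # simulated shift

    psi = population_stability_index(training_sample, production_sample)
    ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff
    if psi > ALERT_THRESHOLD:
        print(f"feature drift suspected (PSI={psi:.2f})")
```

A check like this, run per feature on a schedule, is one way to turn a hunch into a number you can put in front of the team when making the case for retraining.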

Just as the purpose of DevOps is to accelerate the software development lifecycle, the purpose of MLOps is to accelerate the ML development lifecycle. Here’s what to expect as your company begins to make the shift: you’ll adopt new tools, enjoy increased transparency, and implement new processes and potentially new team structures. All this comes with a culture shift. The benefits include deploying models in hours rather than months, increased confidence, and knowing how your work is making an impact.

There are many diagrams that describe this continuous ML lifecycle. This one, from a recent blog post on modern MLOps, gives us a framework for proposing which technologies and processes can accelerate any stage of the cycle.

In parts 2, 3, and 4 of this four-part series, we’ll cover the tools that instrument the ML lifecycle, the processes that enable the people, and the culture shift that enables the full MLOps lifecycle. Until next time, have a great week and thanks for reading!